Today, I’m not doing an exercise from the repository, but I looking into one of the basic goto algorithms in the world of QC, the Quantum Approximate Optimization Algorithm (QAOA). The code snippets are taken from the very instructive tutorial published here.

A common application of this algorithm is to solve so called Quadratic Unbound Binary Optimization (QUBO) problems. The goal of these problems is to find the binary feature vector x, that minimizes an at most quadratic function f:

Therefore, the solution to the problem is a series of zeros and ones. As a matter of fact, a large class of problems can be formulated as such a QUBO problem.

QAOA algorithm

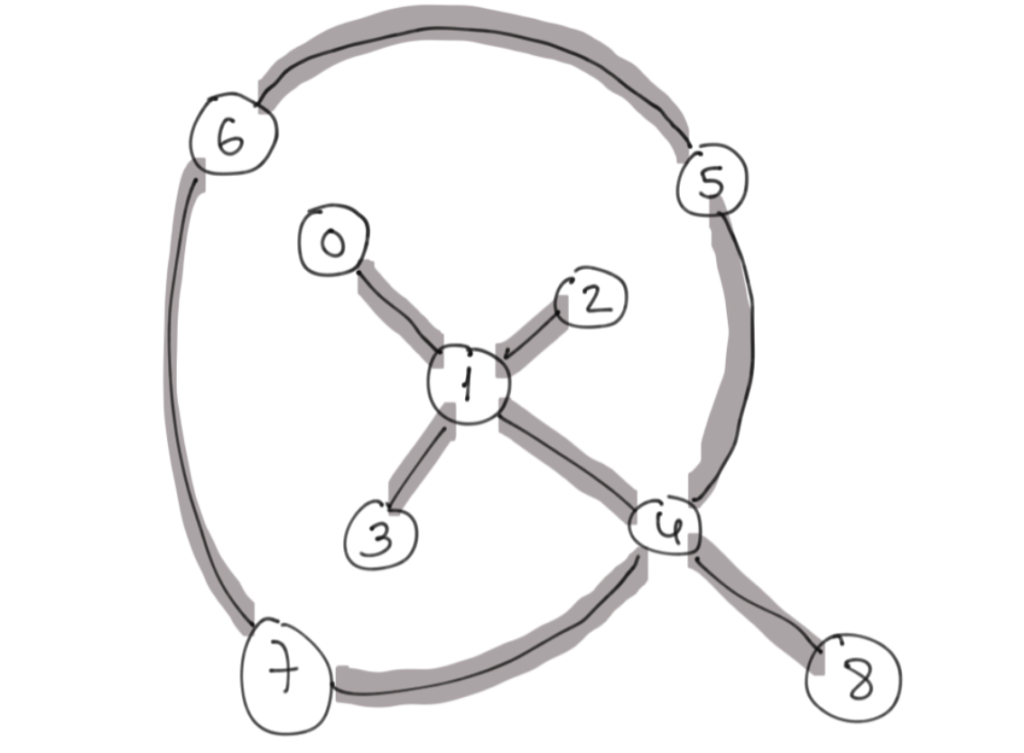

The QAOA algorithm employs a very simple idea. A quantum system is first initialized in a promising state (ideally close to the expected global minimum). Then a series of time evolutions is performed, the time evolutions apply alternating the Hamiltonian encoding the problem and a mixer Hamiltonian, to leave meta-stable local optima states.

In the tutorial, the parameters Alpha and Gamma were optimized using a VQE scheme.

Implementation in PennyLane

First, you’ve got to define a Hamiltonian that characterizes your problem, here’s a toy Hamiltonian

import pennylane as qml

H_P = qml.Hamiltonian(

[1, 1, 0.5],

[qml.PauliX(0), qml.PauliZ(1), qml.PauliX(0) @ qml.PauliX(1)]

)and your mixer Hamiltonian

Then define a time evolution step

def qaoa_layer(params):

qaoa.cost_layer(params[0], H_P)

qaoa.mixer_layer(params[1], H_C)Now, you can combine the initialization and the time evolution sequence to a single circuit

wires = range(4)

depth = 2

def circuit(params, **kwargs):

for w in wires:

qml.Hadamard(wires=w)

qml.layer(qaoa_layer, depth, params[0], params[1])And having a cost function in place, that evaluates the problem function …

dev = qml.device("qulacs.simulator", wires=wires)

@qml.qnode(dev)

def cost_function(params):

circuit(params)

return qml.expval(cost_h)you can run an optimization procedure, to find a good parameterization for the time evolutions.

optimizer = qml.GradientDescentOptimizer()

steps = 70

params = np.array([[0.5, 0.5], [0.5, 0.5]], requires_grad=True)

for i in range(steps):

params = optimizer.step(cost_function, params)

print("Optimal Parameters")

print(params)Interesting observation

The procedure outlined in the tutorial yield good results, providing the highest probabilities for sensible configurations. However, after I went on to modify the algorithm, and increased the number of evolutions from 2 to 5, a less favorable configuration yielded the highest probability. I’ll have to do some research, into why this happens.